References

https://www.toradex.cn/blog/nxp-imx8ji-yueiq-kuang-jia-ce-shi-machine-learning

IMX-MACHINE-LEARNING-UG.pdf

Image classification on CPU and NPU

cd /usr/bin/tensorflow-lite-2.4.0/examples

Running on the CPU

./label_image -m mobilenet_v1_1.0_224_quant.tflite -i grace_hopper.bmp -l labels.txt

INFO: Loaded model mobilenet_v1_1.0_224_quant.tflite

INFO: resolved reporter

INFO: invoked

INFO: average time: 50.66 ms

INFO: 0.780392: 653 military uniform

INFO: 0.105882: 907 Windsor tie

INFO: 0.0156863: 458 bow tie

INFO: 0.0117647: 466 bulletproof vest

INFO: 0.00784314: 835 suit

Running with GPU/NPU acceleration

./label_image -m mobilenet_v1_1.0_224_quant.tflite -i grace_hopper.bmp -l labels.txt -a 1

INFO: Loaded model mobilenet_v1_1.0_224_quant.tflite

INFO: resolved reporter

INFO: Created TensorFlow Lite delegate for NNAPI.

INFO: Applied NNAPI delegate.

INFO: invoked

INFO: average time: 2.775 ms

INFO: 0.768627: 653 military uniform

INFO: 0.105882: 907 Windsor tie

INFO: 0.0196078: 458 bow tie

INFO: 0.0117647: 466 bulletproof vest

INFO: 0.00784314: 835 suit

USE_GPU_INFERENCE=0 ./label_image -m mobilenet_v1_1.0_224_quant.tflite -i grace_hopper.bmp -l labels.txt --external_delegate_path=/usr/lib/libvx_delegate.so
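To compare the two label_image runs above without reading the logs by eye, the reported average time can be pulled out of each log and divided. The following is only a minimal sketch: the function name average_time_ms and the two short log strings are illustrative assumptions, with the numbers copied from the output above.

```python
import re

# Tolerates missing spaces around "time" and before "ms".
AVG_TIME = re.compile(r"average\s*time:\s*([0-9.]+)\s*ms")


def average_time_ms(log_text):
    """Return the average inference time (ms) reported in a label_image log."""
    match = AVG_TIME.search(log_text)
    if match is None:
        raise ValueError("no 'average time' line found")
    return float(match.group(1))


# Shortened copies of the CPU and NNAPI logs shown above.
cpu_log = "INFO: invoked\nINFO: average time: 50.66 ms\n"
npu_log = "INFO: Applied NNAPI delegate.\nINFO: invoked\nINFO: average time: 2.775 ms\n"

cpu_ms = average_time_ms(cpu_log)
npu_ms = average_time_ms(npu_log)
speedup = cpu_ms / npu_ms
print(f"CPU {cpu_ms} ms, NPU {npu_ms} ms, speedup {speedup:.1f}x")
```

With these figures the NPU run comes out roughly 18 times faster than the CPU run for a single image.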

Running from Python

python3 label_image.py

INFO: Created TensorFlow Lite delegate for NNAPI.

Applied NNAPI delegate.

Warm-up time: 6628.5 ms

Inference time: 2.9 ms

0.870588: military uniform

0.031373: Windsor tie

0.011765: mortarboard

0.007843: bow tie

0.007843: bulletproof vest
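The Python log reports the warm-up call separately from the steady-state inference time, which matters here because the first NNAPI call takes seconds while later calls take milliseconds. The sketch below illustrates that measurement pattern only. It is not the source of label_image.py; time_inference and fake_invoke are hypothetical names, and fake_invoke is a stand-in workload so the example runs without a model or an NPU.

```python
import time


def time_inference(invoke, runs=50):
    """Time one warm-up call on its own, then average `runs` further calls (ms)."""
    start = time.perf_counter()
    invoke()  # first call: may include one-off delegate and graph setup
    warmup_ms = (time.perf_counter() - start) * 1000.0

    start = time.perf_counter()
    for _ in range(runs):
        invoke()
    average_ms = (time.perf_counter() - start) * 1000.0 / runs
    return warmup_ms, average_ms


def fake_invoke():
    """Stand-in workload; on the board this would be the interpreter's invoke call."""
    sum(range(10_000))


warmup_ms, average_ms = time_inference(fake_invoke, runs=20)
print(f"Warm-up time: {warmup_ms:.1f} ms")
print(f"Inference time: {average_ms:.1f} ms")
```

Keeping the first call out of the average is what lets a 6628.5 ms warm-up coexist with a 2.9 ms inference time in the same report.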

Benchmark

Single-core CPU run

./benchmark_model --graph=mobilenet_v1_1.0_224_quant.tflite

STARTING!

Log parameter values verbosely: [0]

Graph: [mobilenet_v1_1.0_224_quant.tflite]

Loaded model mobilenet_v1_1.0_224_quant.tflite

The input model file size (MB): 4.27635

Initialized session in 15.076ms.

Running benchmark for at least 1 iterations and at least 0.5 seconds but terminate if exceeding 150 seconds.

count=4 first=166743 curr=161124 min=161054 max=166743 avg=162728 std=2347

Running benchmark for at least 50 iterations and at least 1 seconds but terminate if exceeding 150 seconds.

count=50 first=161039 curr=161030 min=160877 max=161292 avg=161039 std=94

Inference timings in us: Init: 15076, First inference: 166743, Warmup (avg): 162728, Inference (avg): 161039

Note: as the benchmark tool itself affects memory footprint, the following is only APPROXIMATE to the actual memory footprint of the model at runtime. Take the information at your discretion.

Peak memory footprint (MB): init=2.65234 overall=9.00391
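The "Inference timings in us" summary line is the easiest part of the benchmark_model output to process automatically. As a rough sketch (the helper name parse_timings_us is an assumption, and the line format is taken from the log above rather than from any documented contract), it can be split into named fields like this:

```python
def parse_timings_us(line):
    """Parse a benchmark_model 'Inference timings in us' line into a dict of floats."""
    prefix = "Inference timings in us:"
    if not line.startswith(prefix):
        raise ValueError("not a timing summary line")
    fields = {}
    for part in line[len(prefix):].split(","):
        # Each part looks like " Warmup (avg): 162728"; split on the last colon.
        name, value = part.rsplit(":", 1)
        fields[name.strip()] = float(value)
    return fields


summary = parse_timings_us(
    "Inference timings in us: Init: 15076, First inference: 166743, "
    "Warmup (avg): 162728, Inference (avg): 161039"
)
print(f"Average inference: {summary['Inference (avg)'] / 1000:.3f} ms")
```

The same helper also accepts values in scientific notation, such as the warm-up average printed by the NNAPI run.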

Multi-core CPU run

./benchmark_model --graph=mobilenet_v1_1.0_224_quant.tflite --num_threads=4

On this 4-core CPU, setting --num_threads to 4 gives the best performance.

STARTING!

Log parameter values verbosely: [0]

Num threads: [4]

Graph: [mobilenet_v1_1.0_224_quant.tflite]

#threads used for CPU inference: [4]

Loaded model mobilenet_v1_1.0_224_quant.tflite

The input model file size (MB): 4.27635

Initialized session in 2.536ms.

Running benchmark for at least 1 iterations and at least 0.5 seconds but terminate if exceeding 150 seconds.

count=11 first=48722 curr=44756 min=44597 max=49397 avg=45518.9 std=1679

Running benchmark for at least 50 iterations and at least 1 seconds but terminate if exceeding 150 seconds.

count=50 first=44678 curr=44591 min=44590 max=50798 avg=44965.2 std=1170

Inference timings in us: Init: 2536, First inference: 48722, Warmup (avg): 45518.9, Inference (avg): 44965.2

Note: as the benchmark tool itself affects memory footprint, the following is only APPROXIMATE to the actual memory footprint of the model at runtime. Take the information at your discretion.

Peak memory footprint (MB): init=1.38281 overall=8.69922

GPU/NPU acceleration

./benchmark_model --graph=mobilenet_v1_1.0_224_quant.tflite --num_threads=4 --use_nnapi=true

STARTING!

Log parameter values verbosely: [0]

Num threads: [4]

Graph: [mobilenet_v1_1.0_224_quant.tflite]

#threads used for CPU inference: [4]

Use NNAPI: [1]

NNAPI accelerators available: [vsi-npu]

Loaded model mobilenet_v1_1.0_224_quant.tflite

INFO: Created TensorFlow Lite delegate for NNAPI.

Explicitly applied NNAPI delegate, and the model graph will be completely executed by the delegate.

The input model file size (MB): 4.27635

Initialized session in 3.968ms.

Running benchmark for at least 1 iterations and at least 0.5 seconds but terminate if exceeding 150 seconds.

count=1 curr=6611085

Running benchmark for at least 50 iterations and at least 1 seconds but terminate if exceeding 150 seconds.

count=369 first=2715 curr=2623 min=2572 max=2776 avg=2634.2 std=20

Inference timings in us: Init: 3968, First inference: 6611085, Warmup (avg): 6.61108e+06, Inference (avg): 2634.2

Note: as the benchmark tool itself affects memory footprint, the following is only APPROXIMATE to the actual memory footprint of the model at runtime. Take the information at your discretion.

Peak memory footprint (MB): init=2.42188 overall=28.4062
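The NNAPI run pays about 6.6 seconds on its first inference and then settles at about 2.6 ms, so it only pays off once enough inferences have been run. A back-of-the-envelope estimate, assuming the first-inference time is treated as a one-off cost and the CPU's own much smaller warm-up is ignored, is sketched below with the figures from the logs above:

```python
import math

npu_first_us = 6611085   # first NNAPI inference, including warm-up
npu_avg_us = 2634.2      # NNAPI steady-state average
cpu4_avg_us = 44965.2    # 4-thread CPU steady-state average

# Each inference on the NPU saves (cpu4_avg_us - npu_avg_us) microseconds;
# the NPU is ahead once those savings exceed the one-off warm-up cost.
saving_per_inference_us = cpu4_avg_us - npu_avg_us
break_even = math.ceil(npu_first_us / saving_per_inference_us)
print(f"NPU overtakes the 4-thread CPU after about {break_even} inferences")
```

For a long-running application this warm-up is negligible, but for a tool that classifies a handful of images and exits, the 4-thread CPU path can finish sooner.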

Results comparison

| Test | CPU | CPU, multi-core and multi-thread | NPU acceleration |
| --- | --- | --- | --- |
| Image classification | 50.66 ms | | 2.775 ms |
| Benchmark | 161039 µs | 44965.2 µs | 2634.2 µs |
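The benchmark row is easier to interpret as speedups relative to the single-thread CPU run. The snippet below is a small worked calculation using only the averages from the table; the dictionary keys are labels chosen for this example.

```python
# Average inference times in microseconds from the benchmark_model runs.
timings_us = {
    "CPU, 1 thread": 161039.0,
    "CPU, 4 threads": 44965.2,
    "NPU (NNAPI)": 2634.2,
}

baseline_us = timings_us["CPU, 1 thread"]
speedups = {name: baseline_us / t for name, t in timings_us.items()}

for name, t in timings_us.items():
    print(f"{name:<15} {t / 1000:8.2f} ms  {speedups[name]:6.2f}x")

# Fraction of ideal 4x scaling achieved by the 4-thread run.
efficiency = speedups["CPU, 4 threads"] / 4
print(f"4-thread scaling efficiency: {efficiency:.1%}")
```

On these numbers, four threads give about a 3.6x speedup over one thread, and the NPU is about 61x faster than one thread, or about 17x faster than four threads.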

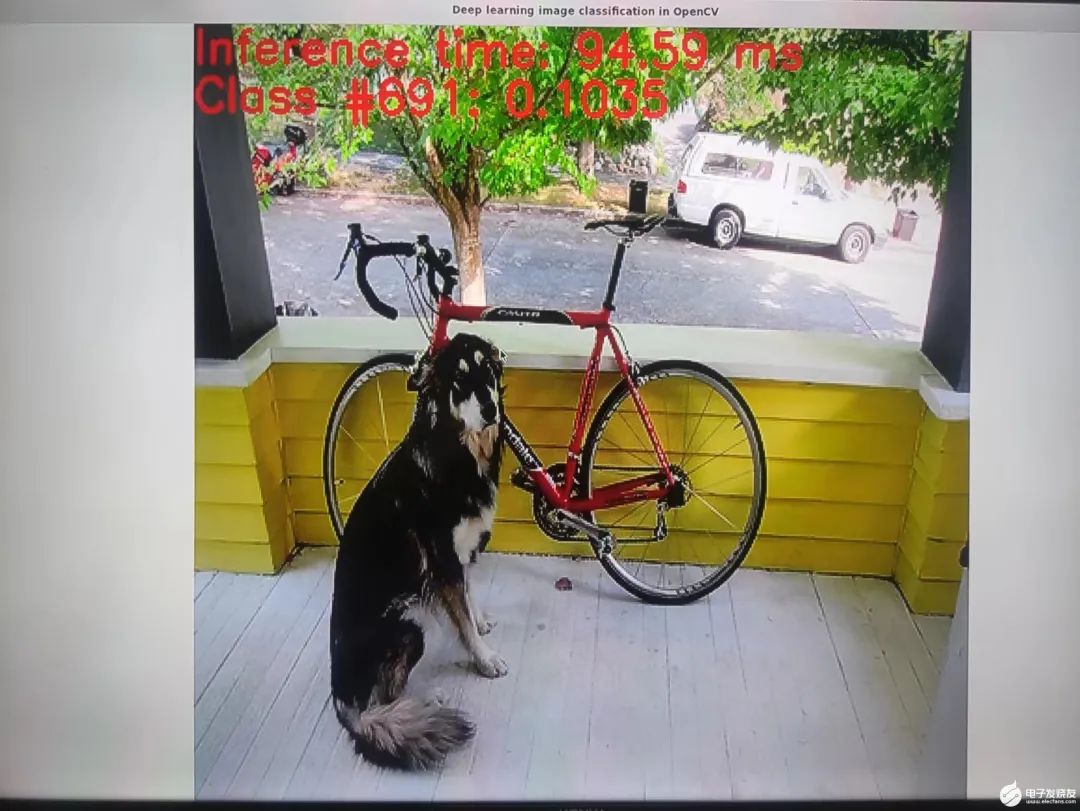

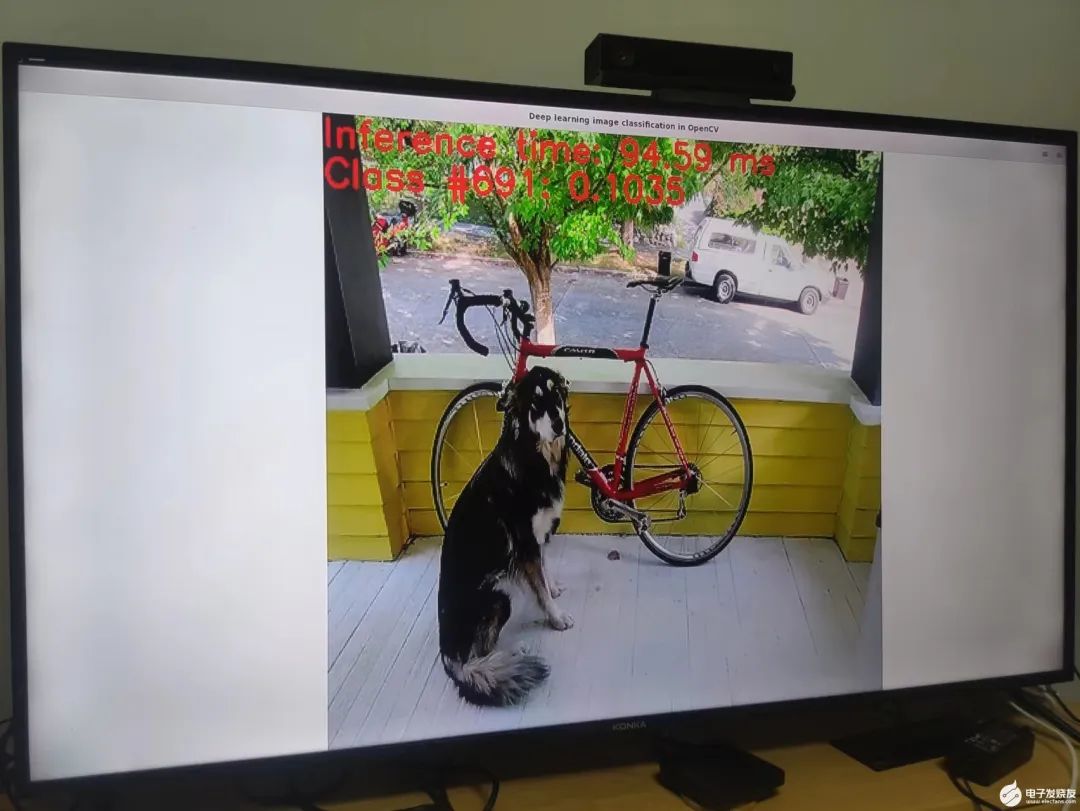

OpenCV DNN

cd /usr/share/OpenCV/samples/bin

./example_dnn_classification --input=dog416.png --zoo=models.yml squeezenet

Downloading the models

cd /usr/share/opencv4/testdata/dnn/

python3 download_models_basic.py

Image classification

cd /usr/share/OpenCV/samples/bin

./example_dnn_classification --input=dog416.png --zoo=models.yml squeezenet

Enter the following address in a file browser's address bar to download the file:

ftp://ftp.toradex.cn/Linux/i.MX8/eIQ/OpenCV/Image_Classification.zip

Extract it to get models.yml and squeezenet_v1.1.caffemodel.

cd /usr/share/OpenCV/samples/bin

Copy both files into /usr/share/OpenCV/samples/bin on the development board, then copy the remaining inputs into the same directory:

$ cp /usr/share/opencv4/testdata/dnn/dog416.png /usr/share/OpenCV/samples/bin/

$ cp /usr/share/opencv4/testdata/dnn/squeezenet_v1.1.prototxt /usr/share/OpenCV/samples/bin/

$ cp /usr/share/OpenCV/samples/data/dnn/classification_classes_ILSVRC2012.txt /usr/share/OpenCV/samples/bin/

$ cd /usr/share/OpenCV/samples/bin/
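Since the sample needs several files side by side, a quick check before running it can save a debugging round. The sketch below lists the file names mentioned in the steps above and reports any that are absent. The helper name missing_files is hypothetical, and the check says nothing about whether the files' contents are valid.

```python
from pathlib import Path

# File names taken from the download and copy steps above.
REQUIRED = [
    "models.yml",
    "squeezenet_v1.1.caffemodel",
    "squeezenet_v1.1.prototxt",
    "classification_classes_ILSVRC2012.txt",
    "dog416.png",
]


def missing_files(directory, required=REQUIRED):
    """Return the required file names that are not present in `directory`."""
    base = Path(directory)
    return [name for name in required if not (base / name).is_file()]


missing = missing_files("/usr/share/OpenCV/samples/bin")
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("All sample files are in place.")
```

It is also worth checking that the name passed to --zoo matches the file on disk exactly (models.yml).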

Image input

./example_dnn_classification --input=dog416.png --zoo=models.yml squeezenet

Error

root@myd-jx8mp:/usr/share/OpenCV/samples/bin# ./example_dnn_classification --input=dog416.png --zoo=model.yml squeezenet

ERRORS:

Missing parameter: 'mean'

Missing parameter: 'rgb'

Add the parameters --rgb and --mean=1.

The error persists, so also add the parameter --mode.

root@myd-jx8mp:/usr/share/OpenCV/samples/bin# ./example_dnn_classification --rgb --mean=1 --input=dog416.png --zoo=models.yml squeezenet

[ WARN:0] global /usr/src/debug/opencv/4.4.0.imx-r0/git/modules/videoio/src/cap_gstreamer.cpp (898) open OpenCV | GStreamer warning: unable to query duration of stream

[ WARN:0] global /usr/src/debug/opencv/4.4.0.imx-r0/git/modules/videoio/src/cap_gstreamer.cpp (935) open OpenCV | GStreamer warning: Cannot query video position: status=1, value=0, duration=-1

root@myd-jx8mp:/usr/share/OpenCV/samples/bin# ./example_dnn_classification --rgb --mean=1 --input=dog416.png --zoo=models.yml squeezenet --mode

[ WARN:0] global /usr/src/debug/opencv/4.4.0.imx-r0/git/modules/videoio/src/cap_gstreamer.cpp (898) open OpenCV | GStreamer warning: unable to query duration of stream

[ WARN:0] global /usr/src/debug/opencv/4.4.0.imx-r0/git/modules/videoio/src/cap_gstreamer.cpp (935) open OpenCV | GStreamer warning: Cannot query video position: status=1, value=0, duration=-1

Video input

./example_dnn_classification --device=2 --zoo=models.yml squeezenet

Problems

If the testdata directory contains no files, search for them in the Yocto build tree:

lhj@DESKTOP-BINN7F8:~/myd-jx8mp-yocto$ find . -name "dog416.png"

./build-xwayland/tmp/work/cortexa53-crypto-mx8mp-poky-linux/opencv/4.4.0.imx-r0/extra/testdata/dnn/dog416.png

Then copy the corresponding files to the development board:

cd ./build-xwayland/tmp/work/cortexa53-crypto-mx8mp-poky-linux/opencv/4.4.0.imx-r0/extra/testdata/

tar -cvf /mnt/e/dnn.tar ./dnn/

On the board, cd /usr/share/opencv4/testdata (create the directory first if it does not exist).

Use rz to transfer dnn.tar to the board.

Extract it: tar -xvf dnn.tar

terminate called after throwing an instance of 'cv::Exception'

what(): OpenCV(4.4.0) /usr/src/debug/opencv/4.4.0.imx-r0/git/samples/dnn/classification.cpp error: (Assertion failed) !model.empty() in function 'main'

Aborted

lhj@DESKTOP-BINN7F8:~/myd-jx8mp-yocto/build-xwayland$ find . -name classification.cpp

lhj@DESKTOP-BINN7F8:~/myd-jx8mp-yocto/build-xwayland$ cp ./tmp/work/cortexa53-crypto-mx8mp-poky-linux/opencv/4.4.0.imx-r0/packages-split/opencv-src/usr/src/debug/opencv/4.4.0.imx-r0/git/samples/dnn/classification.cpp /mnt/e

lhj@DESKTOP-BINN7F8:~/myd-jx8mp-yocto/build-xwayland$

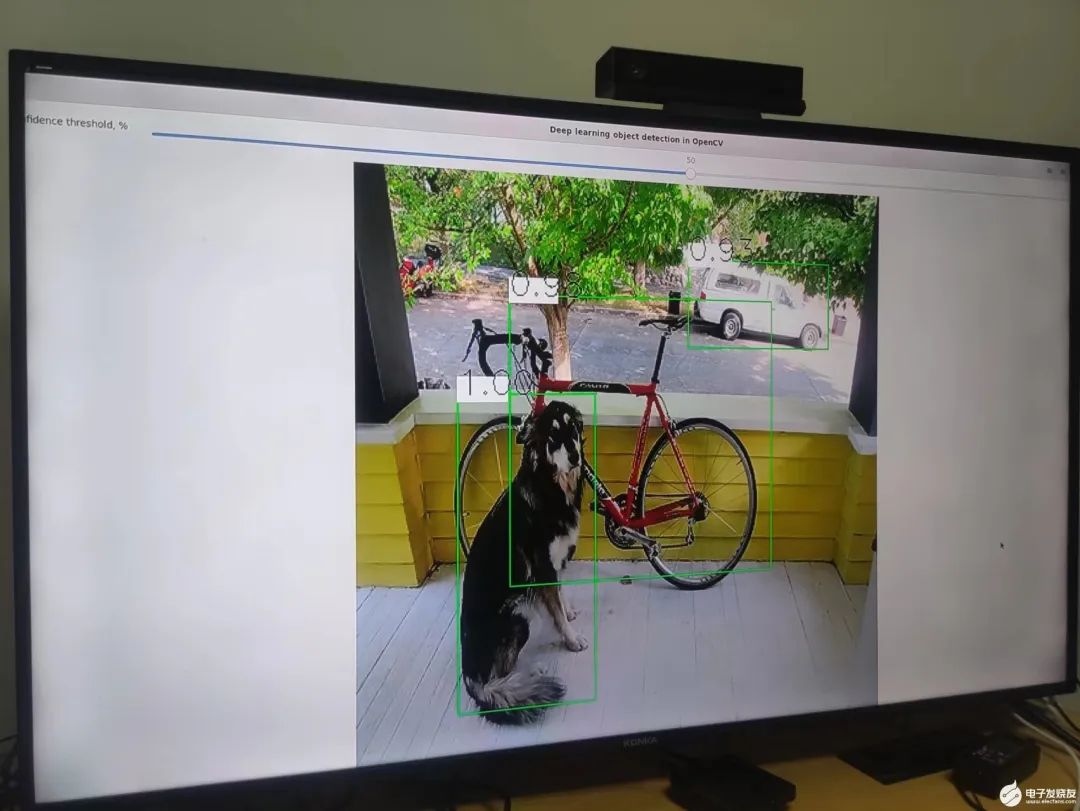

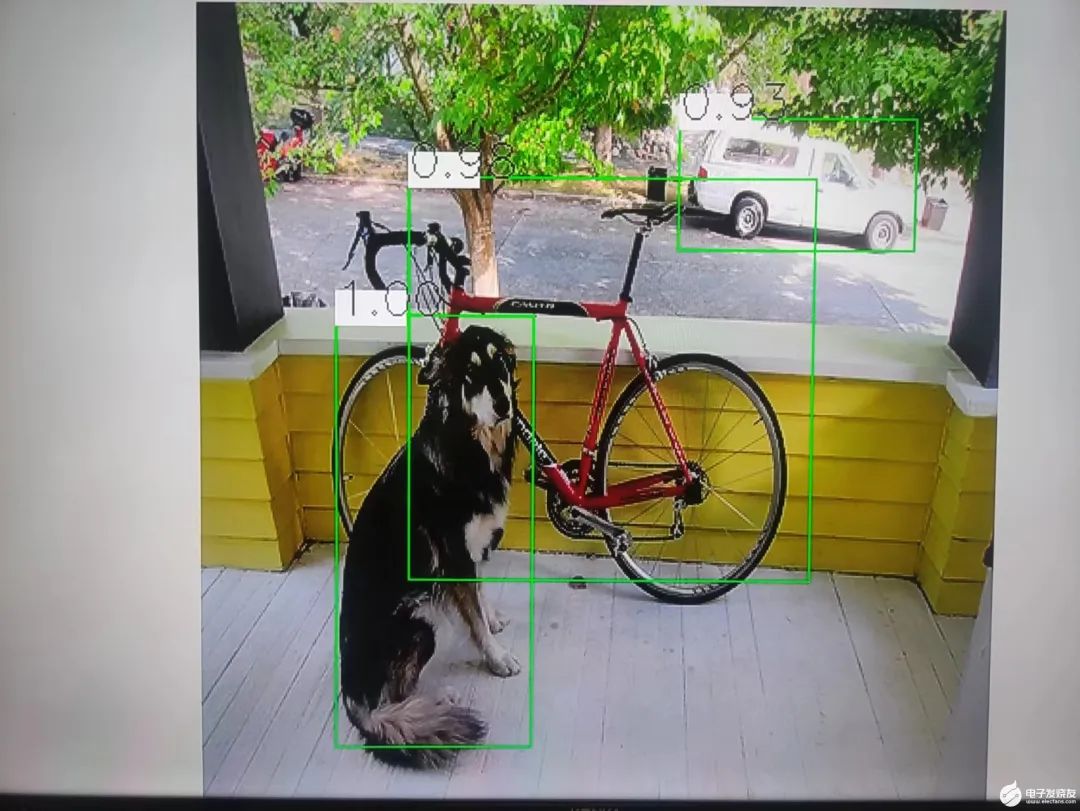

YOLO object detection

cd /usr/share/OpenCV/samples/bin

./example_dnn_object_detection --width=1024 --height=1024 --scale=0.00392 --input=dog416.png --rgb --zoo=models.yml yolo

Download the cfg and weights files from https://pjreddie.com/darknet/yolo/

cd /usr/share/OpenCV/samples/bin/

Copy in the files downloaded above.

cp /usr/share/OpenCV/samples/data/dnn/object_detection_classes_yolov3.txt /usr/share/OpenCV/samples/bin/

cp /usr/share/opencv4/testdata/dnn/yolov3.cfg /usr/share/OpenCV/samples/bin/

./example_dnn_object_detection --width=1024 --height=1024 --scale=0.00392 --input=dog416.png --rgb --zoo=models.yml yolo
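The --scale=0.00392 argument in the command above is approximately 1/255, so multiplying 8-bit pixel values by it maps them to roughly the range 0 to 1. The short calculation below shows this; the function name normalize is only for illustration.

```python
SCALE = 0.00392  # value passed as --scale in the command above


def normalize(pixel, scale=SCALE):
    """Scale an 8-bit pixel value (0 to 255) by the given factor."""
    return pixel * scale


print(f"1/255 = {1 / 255:.6f}")
for pixel in (0, 128, 255):
    print(f"{pixel:3d} -> {normalize(pixel):.4f}")
```

The rounded constant leaves the brightest pixel slightly below 1.0, which is a negligible difference for this purpose.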

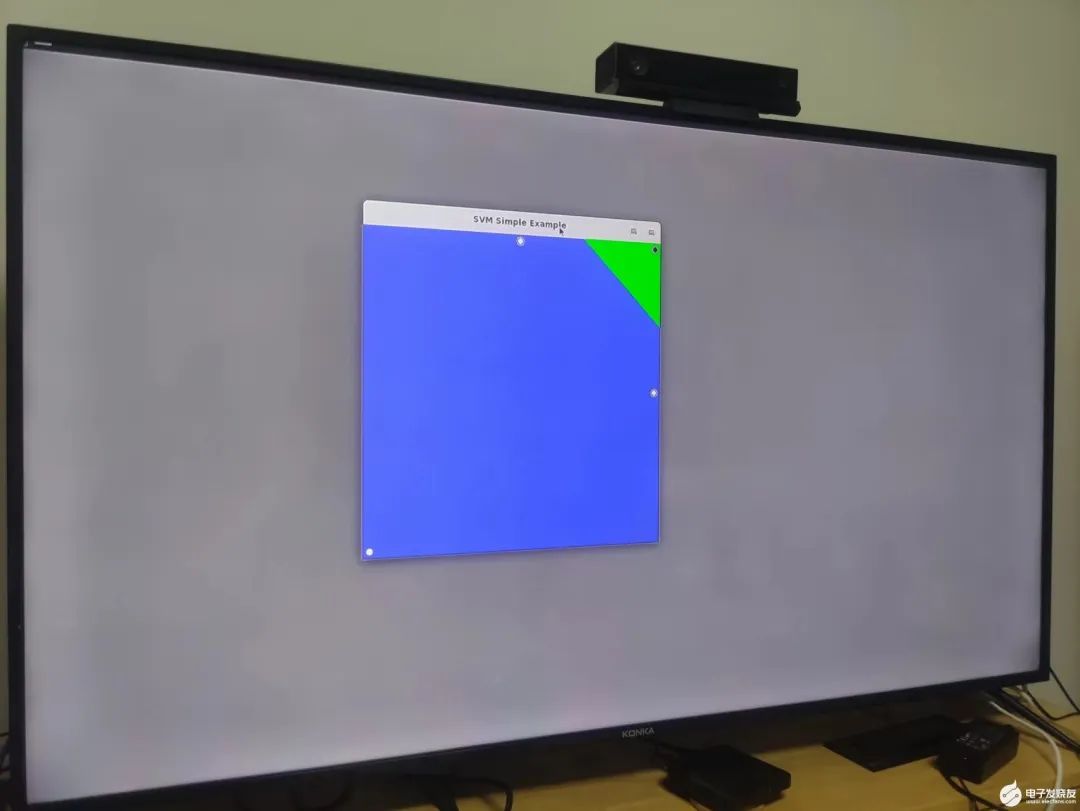

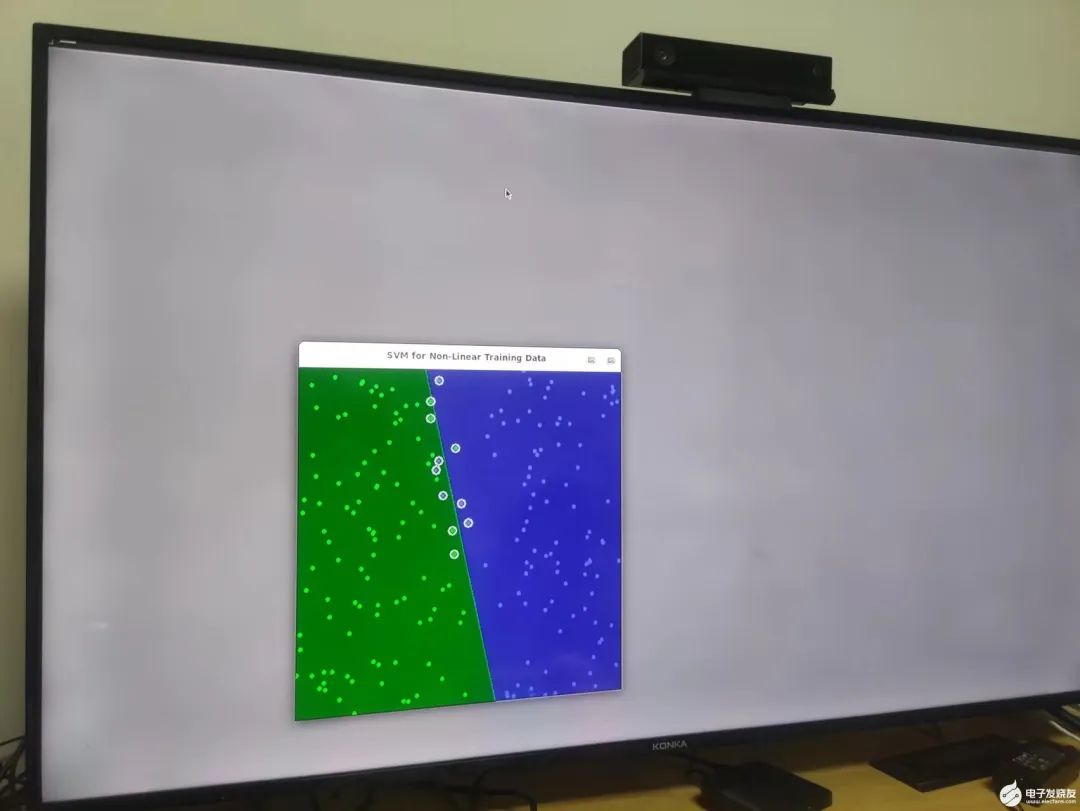

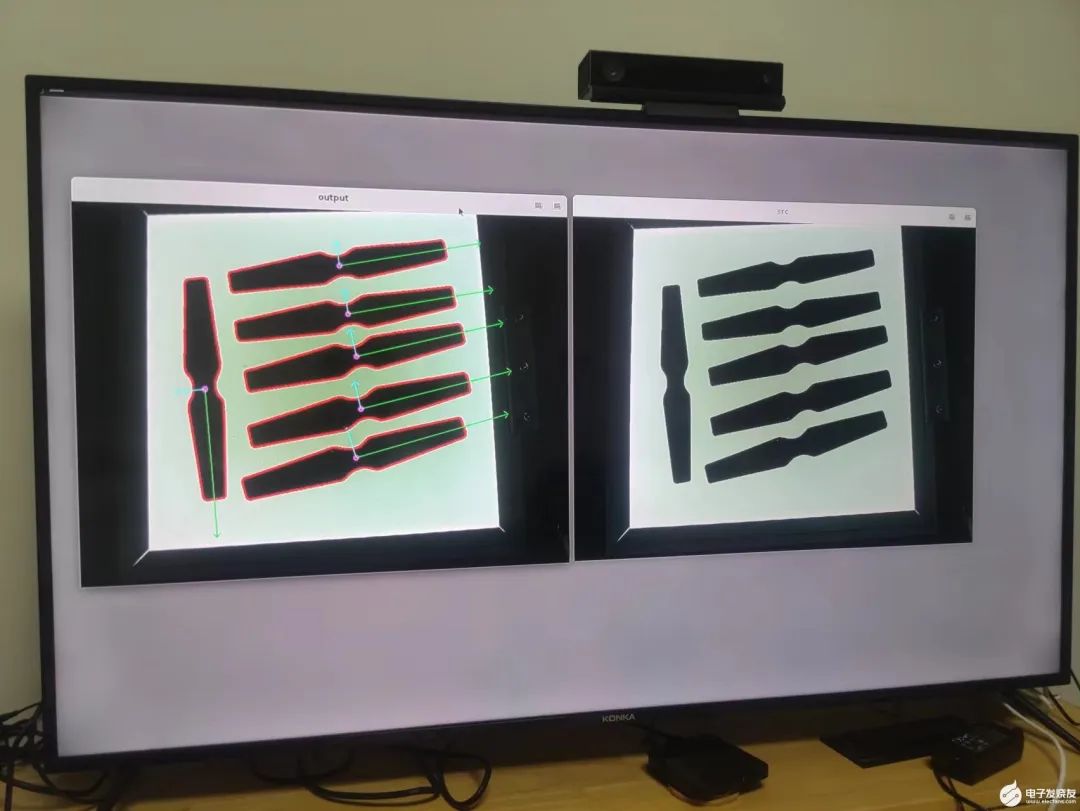

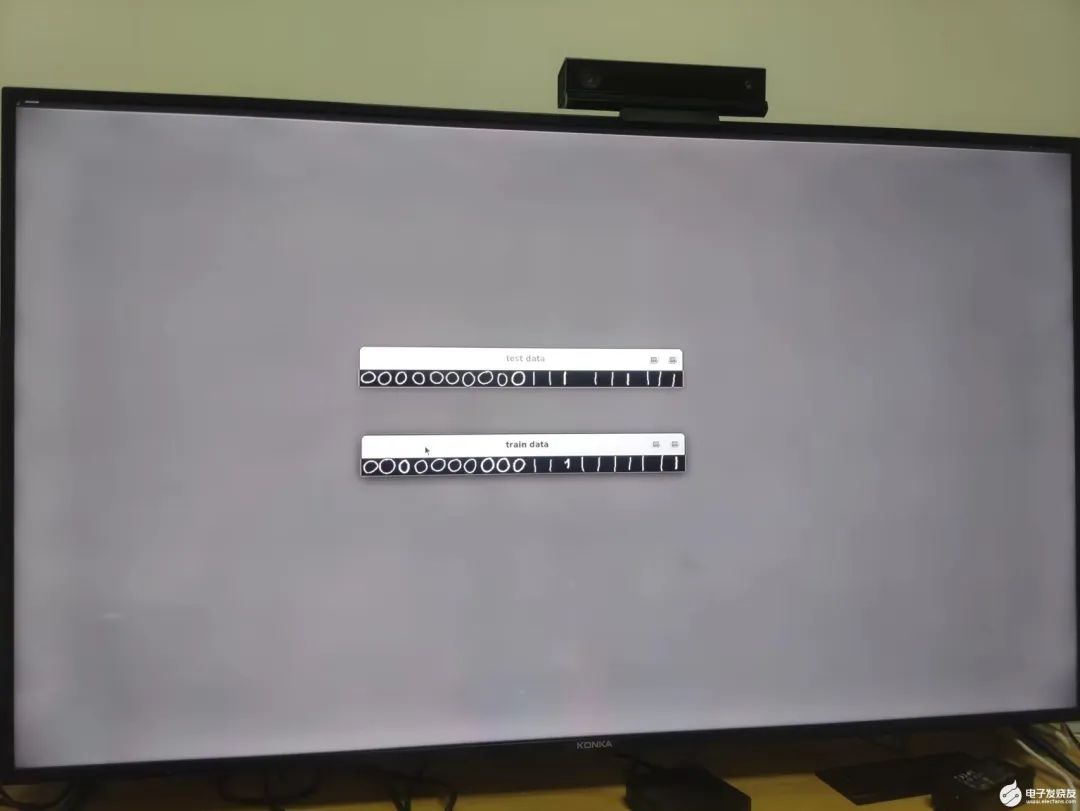

Classic machine learning with OpenCV

cd /usr/share/OpenCV/samples/bin

Linear SVM

./example_tutorial_introduction_to_svm

Non-linear SVM

./example_tutorial_non_linear_svms

PCA analysis

./example_tutorial_introduction_to_pca ../data/pca_test1.jpg

Logistic regression

./example_cpp_logistic_regression

-

嵌入式開(kāi)發(fā)

+關(guān)注

關(guān)注

18文章

1071瀏覽量

48569

發(fā)布評(píng)論請(qǐng)先 登錄

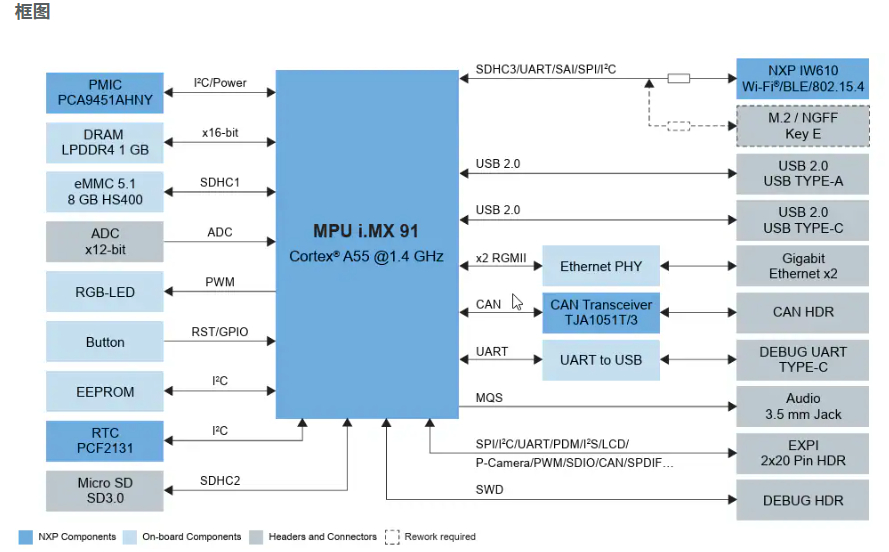

米爾NXP i.MX 91核心板發(fā)布,助力新一代入門(mén)級(jí)Linux應(yīng)用開(kāi)發(fā)

NXP i.MX 91開(kāi)發(fā)板#支持快速創(chuàng)建基于Linux?的邊緣器件

煥新登場(chǎng)!飛凌嵌入式FET-MX8MPQ-SMARC核心板發(fā)布

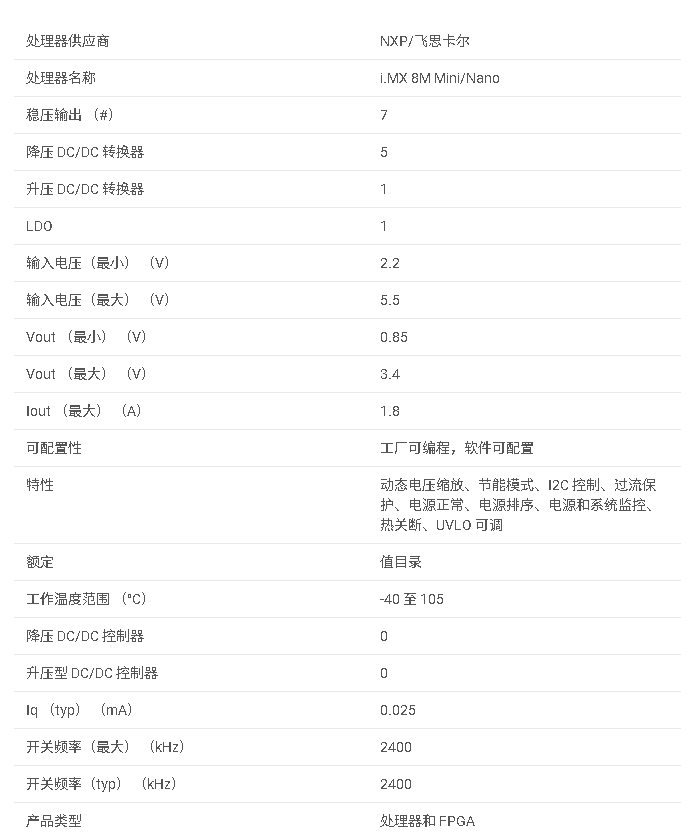

TPS6521825 適用于 NXP i.MX 8M mini 的電源管理 IC數(shù)據(jù)手冊(cè)

將Deepseek移植到i.MX 8MP|93 EVK的步驟

NXP基于i.MX 91應(yīng)用處理器打造的FRDM i.MX 91開(kāi)發(fā)板特性參數(shù)詳解

NXP i.MX 93 開(kāi)發(fā)板#提供高效的機(jī)器學(xué)習(xí) 支持高能效的邊緣計(jì)算

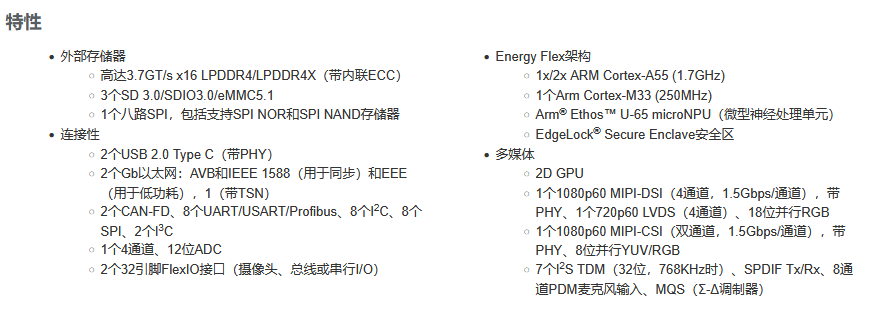

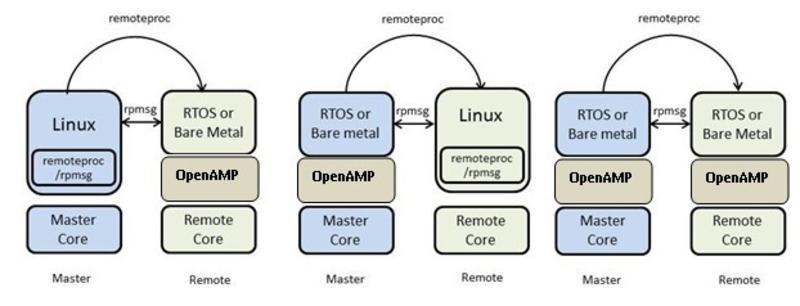

分享!基于NXP i.MX 8M Plus平臺(tái)的OpenAMP核間通信方案

NXP首款搭載MPU的FRDM產(chǎn)品怎么樣?FRDM i.MX93開(kāi)發(fā)板開(kāi)箱速覽

恩智浦推出FRDM i.MX 93開(kāi)發(fā)板

2.3T算力,真的強(qiáng)!1分鐘學(xué)會(huì)NPU開(kāi)發(fā),基于NXP i.MX 8MP平臺(tái)!

i.MX Linux開(kāi)發(fā)實(shí)戰(zhàn)指南—基于野火i.MX系列開(kāi)發(fā)板

使用TPS6521825和LP873347 PMIC為NXP i.MX 8M Mini和Nano供電

使用TPS65219為i.MX 8M Plus供電

NPU和CPU對(duì)比運(yùn)行速度有何不同?基于i.MX 8M Plus處理器的MYD-JX8MPQ開(kāi)發(fā)板

NPU和CPU對(duì)比運(yùn)行速度有何不同?基于i.MX 8M Plus處理器的MYD-JX8MPQ開(kāi)發(fā)板

評(píng)論