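As we saw in Section 19.2, we may have to wait hours or even days before random search returns a good hyperparameter configuration, because evaluating hyperparameter configurations is expensive. In practice, we often have access to a pool of resources, such as multiple GPUs on the same machine or multiple machines with a single GPU each. This raises the question: how do we efficiently distribute random search?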

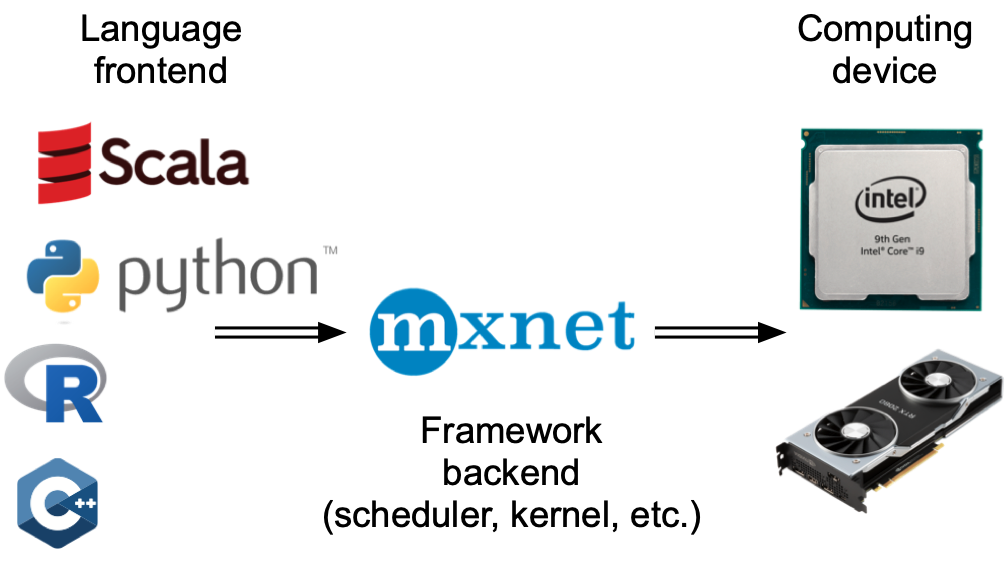

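In general, we distinguish between synchronous and asynchronous parallel hyperparameter optimization (see Fig. 19.3.1). In the synchronous setting, we wait for all concurrently running trials to finish before we start the next batch. Consider configuration spaces that contain hyperparameters such as the number of filters or the number of layers of a deep neural network. Hyperparameter configurations with more filters or more layers naturally take longer to finish, and all other trials in the same batch have to wait at the synchronization points (the grey area in Fig. 19.3.1) before we can continue the optimization process.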

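In the asynchronous setting, we schedule a new trial as soon as resources become available. This makes the best use of our resources, since we avoid any synchronization overhead. For random search, each new hyperparameter configuration is chosen independently of all others, and in particular without exploiting observations from any prior evaluation. This means we can trivially parallelize random search asynchronously. This is not straightforward for more sophisticated methods that make decisions based on previous observations (see Section 19.5). While we need access to more resources than in the sequential setting, asynchronous random search exhibits a linear speed-up, in that a certain performance is reached K times faster if K trials can be run in parallel.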

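Fig. 19.3.1 Distributing the hyperparameter optimization process either synchronously or asynchronously. Compared to the sequential setting, we can reduce the overall wall-clock time while keeping the total compute constant. Synchronous scheduling might lead to idling workers in the case of stragglers.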
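To make the difference between the two schedules concrete, the following is a minimal simulation sketch, not part of Syne Tune. The helper names `synchronous_makespan` and `asynchronous_makespan` and the trial runtimes are made up for illustration. It computes the wall-clock time for a fixed list of trial durations when workers either wait at a barrier after every batch or pick up the next trial as soon as they are free.

```python
import heapq


def synchronous_makespan(durations, n_workers):
    """Wall-clock time when trials run in batches of n_workers with a barrier."""
    total = 0.0
    for start in range(0, len(durations), n_workers):
        batch = durations[start:start + n_workers]
        # Every worker waits for the slowest trial in the batch.
        total += max(batch)
    return total


def asynchronous_makespan(durations, n_workers):
    """Wall-clock time when a free worker immediately starts the next trial."""
    # Min-heap holding the time at which each worker becomes free.
    free_at = [0.0] * n_workers
    heapq.heapify(free_at)
    finish = 0.0
    for duration in durations:
        start = heapq.heappop(free_at)  # earliest available worker
        end = start + duration
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return finish


# Hypothetical trial runtimes with two stragglers.
durations = [1.0, 5.0, 1.0, 5.0]
sequential = sum(durations)
sync = synchronous_makespan(durations, n_workers=2)
async_ = asynchronous_makespan(durations, n_workers=2)
print(sequential, sync, async_)
```

In this toy case both parallel schedules do the same total amount of work, but the synchronous one loses time whenever a short trial shares a batch with a straggler.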

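In this notebook, we will look at asynchronous random search, where trials are executed in multiple Python processes on the same machine. Distributed job scheduling and execution is difficult to implement from scratch. We will use Syne Tune (Salinas et al., 2022), which provides us with a simple interface for asynchronous HPO. Syne Tune is designed to run with different execution backends, and the interested reader is invited to study its simple APIs in order to learn more about distributed HPO.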

import logging
from d2l import torch as d2l

logging.basicConfig(level=logging.INFO)
from syne_tune import StoppingCriterion, Tuner
from syne_tune.backend.python_backend import PythonBackend
from syne_tune.config_space import loguniform, randint
from syne_tune.experiments import load_experiment
from syne_tune.optimizer.baselines import RandomSearch

INFO:root:SageMakerBackend is not imported since dependencies are missing. You can install them with
   pip install 'syne-tune[extra]'
AWS dependencies are not imported since dependencies are missing. You can install them with
   pip install 'syne-tune[aws]'
or (for everything)
   pip install 'syne-tune[extra]'
AWS dependencies are not imported since dependencies are missing. You can install them with
   pip install 'syne-tune[aws]'
or (for everything)
   pip install 'syne-tune[extra]'
INFO:root:Ray Tune schedulers and searchers are not imported since dependencies are missing. You can install them with
   pip install 'syne-tune[raytune]'
or (for everything)
   pip install 'syne-tune[extra]'

19.3.1. Objective Function

First, we have to define a new objective function such that it now returns the performance back to Syne Tune via the report callback.

def hpo_objective_lenet_synetune(learning_rate, batch_size, max_epochs):
    from syne_tune import Reporter
    from d2l import torch as d2l

    model = d2l.LeNet(lr=learning_rate, num_classes=10)
    trainer = d2l.HPOTrainer(max_epochs=1, num_gpus=1)
    data = d2l.FashionMNIST(batch_size=batch_size)
    model.apply_init([next(iter(data.get_dataloader(True)))[0]], d2l.init_cnn)
    report = Reporter()
    for epoch in range(1, max_epochs + 1):
        if epoch == 1:
            # Initialize the state of Trainer
            trainer.fit(model=model, data=data)
        else:
            trainer.fit_epoch()
        validation_error = trainer.validation_error().cpu().detach().numpy()
        report(epoch=epoch, validation_error=float(validation_error))

Note that the PythonBackend of Syne Tune requires dependencies to be imported inside the function definition.
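The reporting pattern can be tried out without a GPU or Syne Tune installed. The sketch below is a simplified stand-in, not Syne Tune's implementation: `toy_objective` and `ListReporter` are hypothetical names, the "training" dynamics are invented, and the callback is passed in as an argument instead of being constructed inside the function as in the real objective above.

```python
def toy_objective(learning_rate, max_epochs, report):
    """Hypothetical stand-in for a training loop that reports after every epoch."""
    error = 1.0
    for epoch in range(1, max_epochs + 1):
        # Made-up dynamics: the error shrinks geometrically with the learning rate.
        error *= 1.0 - min(learning_rate, 0.9)
        report(epoch=epoch, validation_error=float(error))


class ListReporter:
    """Collects reported metrics in memory; a real tuner would forward them."""

    def __init__(self):
        self.results = []

    def __call__(self, **metrics):
        self.results.append(metrics)


reporter = ListReporter()
toy_objective(learning_rate=0.5, max_epochs=3, report=reporter)
for result in reporter.results:
    print(result)
```

Reporting once per epoch, and not only at the end, is what allows a tuner to show intermediate results for trials that are still in progress.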

19.3.2. Asynchronous Scheduler

First, we define the number of workers that evaluate trials concurrently. We also need to specify how long we want to run random search, by defining an upper limit on the total wall-clock time.

n_workers = 2  # Needs to be <= the number of available GPUs
max_wallclock_time = 12 * 60  # 12 minutes
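A wall-clock budget of this kind can be thought of as a predicate that the experiment loop polls. The following is a rough sketch of that idea under our own assumptions. `WallclockStop` is a hypothetical class, not Syne Tune's `StoppingCriterion`, and the injectable `clock` argument exists only so that the example runs instantly.

```python
import time


class WallclockStop:
    """Hypothetical rule: True once max_wallclock_time seconds have elapsed."""

    def __init__(self, max_wallclock_time, clock=time.monotonic):
        self.max_wallclock_time = max_wallclock_time
        self._clock = clock
        self._start = clock()

    def __call__(self):
        return self._clock() - self._start >= self.max_wallclock_time


# Use a fake clock so that we do not actually wait for 12 minutes.
fake_now = [0.0]
stop = WallclockStop(12 * 60, clock=lambda: fake_now[0])
before = stop()      # budget not yet used up
fake_now[0] = 721.0  # pretend that a bit more than 12 minutes have passed
after = stop()
print(before, after)
```

Because the budget limits time and not the number of trials, some trials may still be running when the limit is reached and have to be stopped early.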

Next, we state which metric we want to optimize and whether we want to minimize or maximize this metric. Namely, metric needs to correspond to the argument name passed to the report callback.

mode = "min"
metric = "validation_error"

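We use the same configuration space as in our previous example. In Syne Tune, this dictionary can also be used to pass constant attributes to the training script. We make use of this feature in order to pass max_epochs. Moreover, we specify the first configuration to be evaluated in initial_config.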

config_space = {
    "learning_rate": loguniform(1e-2, 1),
    "batch_size": randint(32, 256),
    "max_epochs": 10,
}
initial_config = {
    "learning_rate": 0.1,
    "batch_size": 128,
}
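To see what drawing a configuration from such a space amounts to, here is a small standard-library sketch. It does not use Syne Tune's samplers. `sample_loguniform` and `sample_config` are hypothetical helpers, and we assume that both integer bounds are inclusive. A log-uniform draw is uniform in the logarithm of the value, so each decade of learning rates is equally likely.

```python
import math
import random


def sample_loguniform(rng, lower, upper):
    """Draw a value whose logarithm is uniform on [log(lower), log(upper)]."""
    return math.exp(rng.uniform(math.log(lower), math.log(upper)))


def sample_config(rng):
    """Sample one configuration; constants are passed through unchanged."""
    return {
        "learning_rate": sample_loguniform(rng, 1e-2, 1.0),
        "batch_size": rng.randint(32, 256),  # assumed inclusive on both ends
        "max_epochs": 10,  # constant attribute, not a hyperparameter
    }


rng = random.Random(0)
configs = [sample_config(rng) for _ in range(5)]
for config in configs:
    print(config)
```

Each call is independent of the previous ones, which is the property that makes random search trivial to run asynchronously.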

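Next, we need to specify the backend for job execution. Here we only consider distribution on a local machine, where parallel jobs are executed as sub-processes. However, for large-scale HPO, we could also run this on a cluster or cloud environment, where each trial consumes a full instance.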

trial_backend = PythonBackend(
    tune_function=hpo_objective_lenet_synetune,
    config_space=config_space,
)

We can now create the scheduler for asynchronous random search, which is similar in behaviour to our BasicScheduler from Section 19.2.

scheduler = RandomSearch(
    config_space,
    metric=metric,
    mode=mode,
    points_to_evaluate=[initial_config],
)

INFO:syne_tune.optimizer.schedulers.fifo:max_resource_level = 10, as inferred from config_space
INFO:syne_tune.optimizer.schedulers.fifo:Master random_seed = 4033665588

Syne Tune also features a Tuner, where the main experiment loop and bookkeeping are centralized, and the interactions between scheduler and backend are mediated.

stop_criterion = StoppingCriterion(max_wallclock_time=max_wallclock_time)

tuner = Tuner(
    trial_backend=trial_backend,
    scheduler=scheduler,
    stop_criterion=stop_criterion,
    n_workers=n_workers,
    print_update_interval=int(max_wallclock_time * 0.6),
)
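The kind of loop such a tuner centralizes can be sketched with the standard library alone. The following is a simplified illustration under stated assumptions, not Syne Tune's implementation. It uses threads in place of sub-processes, stops after a fixed number of trials instead of a wall-clock limit, and `evaluate` is a made-up objective that sleeps briefly. The names `async_random_search`, `x` and `cost` are hypothetical.

```python
import random
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def evaluate(config):
    """Hypothetical trial: sleeps briefly and returns a made-up error."""
    time.sleep(0.01 * config["cost"])
    return abs(config["x"] - 0.3)


def async_random_search(n_workers, max_trials, seed=0):
    rng = random.Random(seed)
    results = []
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        running = {}  # maps each future to the config it evaluates
        started = 0
        while started < max_trials or running:
            # Fill every free worker with an independently sampled config.
            while started < max_trials and len(running) < n_workers:
                config = {"x": rng.random(), "cost": rng.randint(1, 3)}
                running[pool.submit(evaluate, config)] = config
                started += 1
            # Block until at least one trial finishes, not the whole batch.
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                results.append((running.pop(future), future.result()))
    return results


results = async_random_search(n_workers=2, max_trials=6)
best = min(results, key=lambda item: item[1])
print(best)
```

The key design choice is waiting with `FIRST_COMPLETED`: a worker that finishes early is refilled immediately, so no synchronization point arises.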

Let us run our distributed HPO experiment. According to our stopping criterion, it will run for about 12 minutes.

tuner.run()

INFO:syne_tune.tuner:results of trials will be saved on /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691
INFO:root:Detected 8 GPUs
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.1 --batch_size 128 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/0/checkpoints
INFO:syne_tune.tuner:(trial 0) - scheduled config {'learning_rate': 0.1, 'batch_size': 128, 'max_epochs': 10}
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.31642002803324326 --batch_size 52 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/1/checkpoints
INFO:syne_tune.tuner:(trial 1) - scheduled config {'learning_rate': 0.31642002803324326, 'batch_size': 52, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 0 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.045813161553582046 --batch_size 71 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/2/checkpoints
INFO:syne_tune.tuner:(trial 2) - scheduled config {'learning_rate': 0.045813161553582046, 'batch_size': 71, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 1 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.11375402103945391 --batch_size 244 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/3/checkpoints
INFO:syne_tune.tuner:(trial 3) - scheduled config {'learning_rate': 0.11375402103945391, 'batch_size': 244, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 2 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.5211657199736571 --batch_size 47 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/4/checkpoints
INFO:syne_tune.tuner:(trial 4) - scheduled config {'learning_rate': 0.5211657199736571, 'batch_size': 47, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 3 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.05259930532982774 --batch_size 181 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/5/checkpoints
INFO:syne_tune.tuner:(trial 5) - scheduled config {'learning_rate': 0.05259930532982774, 'batch_size': 181, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 5 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.09086002421630578 --batch_size 48 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/6/checkpoints
INFO:syne_tune.tuner:(trial 6) - scheduled config {'learning_rate': 0.09086002421630578, 'batch_size': 48, 'max_epochs': 10}
INFO:syne_tune.tuner:tuning status (last metric is reported)
 trial_id      status  iter  learning_rate  batch_size  max_epochs  epoch  validation_error  worker-time
        0   Completed    10       0.100000         128          10     10          0.258109   108.366785
        1   Completed    10       0.316420          52          10     10          0.146223   179.660365
        2   Completed    10       0.045813          71          10     10          0.311251   143.567631
        3   Completed    10       0.113754         244          10     10          0.336094    90.168444
        4  InProgress     8       0.521166          47          10      8          0.150257   156.696658
        5   Completed    10       0.052599         181          10     10          0.399893    91.044401
        6  InProgress     2       0.090860          48          10      2          0.453050    36.693606
2 trials running, 5 finished (5 until the end), 436.55s wallclock-time

INFO:syne_tune.tuner:Trial trial_id 4 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.03542833641356924 --batch_size 94 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/7/checkpoints
INFO:syne_tune.tuner:(trial 7) - scheduled config {'learning_rate': 0.03542833641356924, 'batch_size': 94, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 6 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.5941192130206245 --batch_size 149 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/8/checkpoints
INFO:syne_tune.tuner:(trial 8) - scheduled config {'learning_rate': 0.5941192130206245, 'batch_size': 149, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 7 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.013696247675312455 --batch_size 135 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/9/checkpoints
INFO:syne_tune.tuner:(trial 9) - scheduled config {'learning_rate': 0.013696247675312455, 'batch_size': 135, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 8 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.11837221527625114 --batch_size 75 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/10/checkpoints
INFO:syne_tune.tuner:(trial 10) - scheduled config {'learning_rate': 0.11837221527625114, 'batch_size': 75, 'max_epochs': 10}
INFO:syne_tune.tuner:Trial trial_id 9 completed.
INFO:root:running subprocess with command: /home/d2l-worker/miniconda3/envs/d2l-en-release-0/bin/python /home/d2l-worker/miniconda3/envs/d2l-en-release-0/lib/python3.9/site-packages/syne_tune/backend/python_backend/python_entrypoint.py --learning_rate 0.18877290342981604 --batch_size 187 --max_epochs 10 --tune_function_root /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/tune_function --tune_function_hash 53504c42ecb95363b73ac1f849a8a245 --st_checkpoint_dir /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691/11/checkpoints
INFO:syne_tune.tuner:(trial 11) - scheduled config {'learning_rate': 0.18877290342981604, 'batch_size': 187, 'max_epochs': 10}
INFO:syne_tune.stopping_criterion:reaching max wallclock time (720), stopping there.
INFO:syne_tune.tuner:Stopping trials that may still be running.
INFO:syne_tune.tuner:Tuning finished, results of trials can be found on /home/d2l-worker/syne-tune/python-entrypoint-2023-02-10-04-56-21-691
--------------------
Resource summary (last result is reported):
 trial_id      status  iter  learning_rate  batch_size  max_epochs  epoch  validation_error  worker-time
        0   Completed    10       0.100000         128          10   10.0          0.258109   108.366785
        1   Completed    10       0.316420          52          10   10.0          0.146223   179.660365
        2   Completed    10       0.045813          71          10   10.0          0.311251   143.567631
        3   Completed    10       0.113754         244          10   10.0          0.336094    90.168444
        4   Completed    10       0.521166          47          10   10.0          0.146092   190.111242
        5   Completed    10       0.052599         181          10   10.0          0.399893    91.044401
        6   Completed    10       0.090860          48          10   10.0          0.197369   172.148435
        7   Completed    10       0.035428          94          10   10.0          0.414369   112.588123
        8   Completed    10       0.594119         149          10   10.0          0.177609    99.182505
        9   Completed    10       0.013696         135          10   10.0          0.901235   107.753385
       10  InProgress     2       0.118372          75          10    2.0          0.465970    32.484881
       11  InProgress     0       0.188773         187          10      -                 -            -
2 trials running, 10 finished (10 until the end), 722.92s wallclock-time

validation_error: best 0.1377706527709961 for trial-id 4
--------------------

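The logs of all evaluated hyperparameter configurations are stored for further analysis. At any time during the tuning job, we can easily retrieve the results obtained so far and plot the incumbent trajectory.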

d2l.set_figsize()
tuning_experiment = load_experiment(tuner.name)
tuning_experiment.plot()

WARNING:matplotlib.legend:No artists with labels found to put in legend. Note that artists whose label start with an underscore are ignored when legend() is called with no argument.
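The incumbent trajectory is simply the best value observed so far, tracked as results arrive. As a minimal sketch of that computation (the helper `incumbent_trajectory` and the error values are made up for illustration and are not taken from the run above):

```python
def incumbent_trajectory(values, mode="min"):
    """Best value observed so far after each result, in arrival order."""
    better = min if mode == "min" else max
    trajectory = []
    best = None
    for value in values:
        best = value if best is None else better(best, value)
        trajectory.append(best)
    return trajectory


# Hypothetical validation errors in the order in which they were reported.
errors = [0.45, 0.26, 0.31, 0.15, 0.20]
print(incumbent_trajectory(errors, mode="min"))
```

With mode set to "min" the trajectory never increases, which is why the plotted curve looks like a descending staircase.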

19.3.3. Visualize the Asynchronous Optimization Process

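Below we visualize how the learning curves of every trial (each color in the plot represents a trial) evolve during the asynchronous optimization process. At any point in time, there are as many trials running concurrently as we have workers. Once a trial finishes, we immediately start the next trial, without waiting for the other trials to finish. Asynchronous scheduling thus reduces the idle time of workers to a minimum.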

d2l.set_figsize([6, 2.5])
results = tuning_experiment.results

for trial_id in results.trial_id.unique():
    df = results[results["trial_id"] == trial_id]
    d2l.plt.plot(
        df["st_tuner_time"],
        df["validation_error"],
        marker="o"
    )

d2l.plt.xlabel("wall-clock time")
d2l.plt.ylabel("objective function")

Text(0, 0.5, 'objective function')
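The claim that the number of concurrently running trials matches the number of workers can also be checked numerically. The sketch below assumes that we know a start and end time for each trial. The `intervals` are invented for illustration and `max_concurrency` is a hypothetical helper. It sweeps over the start and end events in time order and tracks how many trials are running.

```python
def max_concurrency(intervals):
    """Largest number of trials running at the same time."""
    events = []
    for start, end in intervals:
        events.append((start, 1))   # a trial starts
        events.append((end, -1))    # a trial ends
    # Sort by time; at equal times, ends (-1) are processed before starts (+1),
    # so a worker that hands over to the next trial is not counted twice.
    events.sort(key=lambda event: (event[0], event[1]))
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


# Hypothetical (start, end) times of four trials on two workers.
intervals = [(0, 3), (0, 5), (3, 6), (5, 7)]
print(max_concurrency(intervals))
```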

19.3.4. Summary

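We can reduce the waiting time for random search substantially by distributing trials across parallel resources. In general, we distinguish between synchronous scheduling and asynchronous scheduling. Synchronous scheduling means that we sample a new batch of hyperparameter configurations once the previous batch has finished. If we have stragglers, that is, trials that take more time to finish than other trials, our workers need to wait at synchronization points. Asynchronous scheduling evaluates a new hyperparameter configuration as soon as resources become available, and thus ensures that all workers are busy at any point in time. While random search is easy to distribute asynchronously and does not require any change to the actual algorithm, other methods require some additional modifications.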

19.3.5. Exercises

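Consider the DropoutMLP model implemented in Section 5.6 and used in Exercise 1 of Section 19.2.

Implement an objective function hpo_objective_dropoutmlp_synetune to be used with Syne Tune. Make sure that your function reports the validation error after every epoch.

Using the setup of Exercise 1 in Section 19.2, compare random search to Bayesian optimization. If you use SageMaker, feel free to use Syne Tune's benchmarking facilities in order to run experiments in parallel. Hint: Bayesian optimization is provided as syne_tune.optimizer.baselines.BayesianOptimization.

For this exercise, you need to run on an instance with at least 4 CPU cores. For one of the methods used above (random search, Bayesian optimization), run experiments with n_workers=1, n_workers=2, and n_workers=4, and compare the results (incumbent trajectories). At least for random search, you should observe linear scaling with respect to the number of workers. Hint: for robust results, you may have to average over several repetitions of each experiment.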

Advanced. The goal of this exercise is to implement a new scheduler in Syne Tune.

Create a virtual environment containing both the d2lbook and syne-tune sources.

Implement the LocalSearcher from Exercise 2 in Section 19.2 as a new searcher in Syne Tune. Hint: read this tutorial. Alternatively, you may follow this example.

Compare your new LocalSearcher with RandomSearch on the DropoutMLP benchmark.

