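In our introduction to linear regression, we walked through various components including the data, the model, the loss function, and the optimization algorithm. Indeed, linear regression is one of the simplest machine learning models. Training it, however, uses many of the same components that other models in this book require. Therefore, before diving into the implementation details it is worth designing some of the APIs that we use throughout. Treating components in deep learning as objects, we can start by defining classes for these objects and their interactions. This object-oriented design for implementation will greatly streamline the presentation, and you might even want to use it in your own projects.

Inspired by open-source libraries such as PyTorch Lightning, at a high level we wish to have three classes: (i) Module contains models, losses, and optimization methods; (ii) DataModule provides data loaders for training and validation; (iii) both classes are combined using the Trainer class, which allows us to train models on a variety of hardware platforms. Most code in this book adapts Module and DataModule. We will touch upon the Trainer class only when we discuss GPUs, CPUs, parallel training, and optimization algorithms.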

# PyTorch
import time
import numpy as np
import torch
from torch import nn
from d2l import torch as d2l

# MXNet
import time
import numpy as np
from mxnet.gluon import nn
from d2l import mxnet as d2l

# JAX
import time
from dataclasses import field
from typing import Any
import jax
import numpy as np
from flax import linen as nn
from flax.training import train_state
from jax import numpy as jnp
from d2l import jax as d2l


# TensorFlow
import time
import numpy as np
import tensorflow as tf
from d2l import tensorflow as d2l

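3.2.1. Utilities

We need a few utilities to simplify object-oriented programming in Jupyter notebooks. One of the challenges is that class definitions tend to be fairly long blocks of code. Notebook readability demands short code fragments interspersed with explanations, a requirement incompatible with the style of programming common for Python libraries. The first utility function allows us to register functions as methods in a class after the class has been created. In fact, we can do so even after we have created instances of the class! It allows us to split the implementation of a class into multiple code blocks.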

def add_to_class(Class):  #@save
    """Register functions as methods in created class."""
    def wrapper(obj):
        setattr(Class, obj.__name__, obj)
    return wrapper

Let's have a quick look at how to use it. We plan to implement a class A with a method do. Instead of having the code for both A and do in the same code block, we can first declare the class A and create an instance a.

class A:
    def __init__(self):
        self.b = 1

a = A()

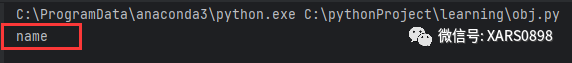

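Next we define the method do as we normally would, but not in the scope of class A. Instead, we decorate this method with add_to_class, passing class A as its argument. In doing so, the method is able to access the member variables of A just as we would expect had it been included as part of A's definition. Let's see what happens when we invoke it for the instance a.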

@add_to_class(A)
def do(self):
    print('Class attribute "b" is', self.b)

a.do()

Class attribute "b" is 1
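The reason this works for an instance created earlier is that Python looks up methods on the class at call time, not when the instance is built. The self-contained sketch below repeats the decorator from above and also shows a side effect worth knowing: because wrapper returns nothing, the module-level name of the decorated function ends up bound to None. The variable names result and module_level are illustrative only.

```python
def add_to_class(Class):
    """Register functions as methods in created class."""
    def wrapper(obj):
        setattr(Class, obj.__name__, obj)
    return wrapper


class A:
    def __init__(self):
        self.b = 1


a = A()  # the instance exists before the method does


@add_to_class(A)
def do(self):
    return self.b + 1


result = a.do()      # found on class A when the call happens
module_level = do    # the decorator returned None, so this name is None
```

If the function should also stay usable under its own name, a variant of wrapper could return obj; the version above keeps the class as the only place where the method lives.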


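The second one is a utility class that saves all arguments of a class's __init__ method as class attributes. This allows us to extend constructor call signatures implicitly without additional code.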

class HyperParameters:  #@save
    """The base class of hyperparameters."""
    def save_hyperparameters(self, ignore=[]):
        # NotImplementedError is the exception; NotImplemented is not raisable
        raise NotImplementedError

We defer its implementation to Section 23.7. To use it, we define our class so that it inherits from HyperParameters and calls save_hyperparameters in the __init__ method.

# Call the fully implemented HyperParameters class saved in d2l
class B(d2l.HyperParameters):
    def __init__(self, a, b, c):
        self.save_hyperparameters(ignore=['c'])
        print('self.a =', self.a, 'self.b =', self.b)
        print('There is no self.c =', not hasattr(self, 'c'))

b = B(a=1, b=2, c=3)

self.a = 1 self.b = 2
There is no self.c = True
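To make the behavior above less magical, here is a minimal sketch of how such a method could be written with the standard inspect module. It is an illustration under that assumption, not the implementation saved in d2l, and the class name HyperParametersSketch is hypothetical.

```python
import inspect


class HyperParametersSketch:
    """Illustrative stand-in, not the d2l implementation."""

    def save_hyperparameters(self, ignore=()):
        # Look one frame up the call stack: the __init__ that called us.
        frame = inspect.currentframe().f_back
        _, _, _, local_vars = inspect.getargvalues(frame)
        for name, value in local_vars.items():
            # Skip self, ignored names, and private or implicit locals.
            if name == 'self' or name in ignore or name.startswith('_'):
                continue
            setattr(self, name, value)


class B(HyperParametersSketch):
    def __init__(self, a, b, c):
        self.save_hyperparameters(ignore=['c'])


b = B(a=1, b=2, c=3)
```

The design trades explicitness for brevity: the constructor no longer repeats self.a = a for every argument, at the cost of relying on frame inspection.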


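The final utility allows us to plot experiment progress interactively while it is going on. In deference to the much more powerful (and complex) TensorBoard, we name it ProgressBoard. The implementation is deferred to Section 23.7. For now, let's simply see it in action.

The draw method plots a point (x, y) in the figure, with label specified in the legend. The optional every_n smooths the line by showing only 1/n of the points in the figure. Their values are averaged from the n neighboring points in the original figure.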

class ProgressBoard(d2l.HyperParameters):  #@save
    """The board that plots data points in animation."""
    def __init__(self, xlabel=None, ylabel=None, xlim=None,
                 ylim=None, xscale='linear', yscale='linear',
                 ls=['-', '--', '-.', ':'], colors=['C0', 'C1', 'C2', 'C3'],
                 fig=None, axes=None, figsize=(3.5, 2.5), display=True):
        self.save_hyperparameters()

    def draw(self, x, y, label, every_n=1):
        # NotImplementedError is the exception; NotImplemented is not raisable
        raise NotImplementedError

In the following example, we draw sin and cos with different smoothness. If you run this code block, you will see the lines grow in animation.

board = d2l.ProgressBoard('x')
for x in np.arange(0, 10, 0.1):
    board.draw(x, np.sin(x), 'sin', every_n=2)
    board.draw(x, np.cos(x), 'cos', every_n=10)
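The effect of every_n can be illustrated without any plotting. The sketch below is one plausible reading of the description above: consecutive groups of every_n points are each replaced by their mean, and an incomplete trailing group is held back. The function name smooth_every_n is hypothetical and this is not the ProgressBoard implementation.

```python
def smooth_every_n(points, every_n):
    """Average each consecutive group of every_n (x, y) points into one."""
    smoothed = []
    # Only complete groups are emitted; leftovers wait for more points.
    for i in range(0, len(points) - every_n + 1, every_n):
        group = points[i:i + every_n]
        mean_x = sum(p[0] for p in group) / every_n
        mean_y = sum(p[1] for p in group) / every_n
        smoothed.append((mean_x, mean_y))
    return smoothed


raw = [(0, 0), (1, 2), (2, 4), (3, 6), (4, 8)]
smoothed = smooth_every_n(raw, every_n=2)
# smoothed == [(0.5, 1.0), (2.5, 5.0)]; the fifth point is not yet shown
```

With every_n=2 the figure shows half as many points, and with every_n=10 a tenth, which is why the cos curve in the example above would look coarser than the sin curve.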


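3.2.2. Models

The Module class is the base class of all models we will implement. At the very least we need three methods. The first, __init__, stores the learnable parameters; the training_step method accepts a data batch and returns the loss value; and finally, configure_optimizers returns the optimization method, or a list of them, that is used to update the learnable parameters. Optionally we can define validation_step to report the evaluation measures. Sometimes we put the code for computing the output into a separate forward method to make it more reusable.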

class Module(nn.Module, d2l.HyperParameters):  #@save
    """The base class of models."""
    def __init__(self, plot_train_per_epoch=2, plot_valid_per_epoch=1):
        super().__init__()
        self.save_hyperparameters()
        self.board = ProgressBoard()

    def loss(self, y_hat, y):
        raise NotImplementedError

    def forward(self, X):
        assert hasattr(self, 'net'), 'Neural network is not defined'
        return self.net(X)

    def plot(self, key, value, train):
        """Plot a point in animation."""
        assert hasattr(self, 'trainer'), 'Trainer is not inited'
        self.board.xlabel = 'epoch'
        if train:
            x = self.trainer.train_batch_idx / \
                self.trainer.num_train_batches
            n = self.trainer.num_train_batches / \
                self.plot_train_per_epoch
        else:
            x = self.trainer.epoch + 1
            n = self.trainer.num_val_batches / \
                self.plot_valid_per_epoch
        self.board.draw(x, value.to(d2l.cpu()).detach().numpy(),
                        ('train_' if train else 'val_') + key,
                        every_n=int(n))

    def training_step(self, batch):
        l = self.loss(self(*batch[:-1]), batch[-1])
        self.plot('loss', l, train=True)
        return l

    def validation_step(self, batch):
        l = self.loss(self(*batch[:-1]), batch[-1])
        self.plot('loss', l, train=False)

    def configure_optimizers(self):
        raise NotImplementedError

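You may notice that Module is a subclass of nn.Module, the base class of neural networks in PyTorch. It provides convenient features to handle neural networks. For example, if we define a forward method, such as forward(self, X), then for an instance a we can invoke this method by a(X). This works since it calls the forward method in the built-in __call__ method. You can find more details and examples about nn.Module in Section 6.1.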
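The dispatch from a(X) to forward can be mimicked in a few lines of plain Python. The sketch below only illustrates the mechanism; the classes CallableModule and Scale are hypothetical, and the real framework base classes do considerably more inside __call__ than forwarding the arguments.

```python
class CallableModule:
    """Hypothetical base class that is not tied to any framework."""

    def __call__(self, *args, **kwargs):
        # Calling the instance forwards the arguments to forward().
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class Scale(CallableModule):
    def __init__(self, factor):
        self.factor = factor

    def forward(self, X):
        return [self.factor * x for x in X]


a = Scale(2)
out = a([1, 2, 3])  # same as a.forward([1, 2, 3])
```

Routing every call through __call__ gives the base class a single place to add behavior around forward, which is one reason subclasses are asked to override forward rather than __call__.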

class Module(nn.Block, d2l.HyperParameters):  #@save
    """The base class of models."""
    def __init__(self, plot_train_per_epoch=2, plot_valid_per_epoch=1):
        super().__init__()
        self.save_hyperparameters()
        self.board = ProgressBoard()

    def loss(self, y_hat, y):
        raise NotImplementedError

    def forward(self, X):
        assert hasattr(self, 'net'), 'Neural network is not defined'
        return self.net(X)

    def plot(self, key, value, train):
        """Plot a point in animation."""
        assert hasattr(self, 'trainer'), 'Trainer is not inited'
        self.board.xlabel = 'epoch'
        if train:
            x = self.trainer.train_batch_idx / \
                self.trainer.num_train_batches
            n = self.trainer.num_train_batches / \
                self.plot_train_per_epoch
        else:
            x = self.trainer.epoch + 1
            n = self.trainer.num_val_batches / \
                self.plot_valid_per_epoch
        self.board.draw(x, value.asnumpy(), (
            'train_' if train else 'val_') + key, every_n=int(n))

    def training_step(self, batch):
        l = self.loss(self(*batch[:-1]), batch[-1])
        self.plot('loss', l, train=True)
        return l

    def validation_step(self, batch):
        l = self.loss(self(*batch[:-1]), batch[-1])
        self.plot('loss', l, train=False)

    def configure_optimizers(self):
        raise NotImplementedError

You may notice that Module is a subclass of nn.Block, the base class of neural networks in Gluon. It provides convenient features to handle neural networks. For example, if we define a forward method, such as forward(self, X), then for an instance a we can invoke this method by a(X). This works since it calls the forward method in the built-in __call__ method. You can find more details and examples about nn.Block in Section 6.1.

With the introduction of dataclasses in Python 3.7, classes decorated with @dataclass automatically add magic methods such as __init__ and __repr__. The member variables are defined using type annotations. All Flax modules are Python 3.7 dataclasses.
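The two field options that matter for the Flax version of Module can be tried with the standard library alone. The following sketch uses a hypothetical Config class and does not involve Flax: init=False keeps a field out of the generated __init__, and default_factory builds a fresh default for every instance.

```python
from dataclasses import dataclass, field


@dataclass
class Config:
    hidden: int  # required constructor argument
    # Has a default but cannot be passed to the constructor.
    plot_per_epoch: int = field(default=2, init=False)
    # A new list per instance, instead of one list shared by all.
    history: list = field(default_factory=list, init=False)


c1, c2 = Config(hidden=8), Config(hidden=16)
c1.history.append(0.5)

try:
    Config(hidden=8, plot_per_epoch=3)
    rejected = False
except TypeError:
    rejected = True  # init=False fields are not accepted by __init__
```

This mirrors why the listing that follows needs no save_hyperparameters call: the annotated class variables already become instance attributes.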

class Module(nn.Module, d2l.HyperParameters):  #@save
    """The base class of models."""
    # No need for save_hyperparam when using Python dataclass
    plot_train_per_epoch: int = field(default=2, init=False)
    plot_valid_per_epoch: int = field(default=1, init=False)
    # Use default_factory to make sure new plots are generated on each run
    board: ProgressBoard = field(default_factory=lambda: ProgressBoard(),
                                 init=False)

    def loss(self, y_hat, y):
        raise NotImplementedError

    # JAX & Flax do not have a forward-method-like syntax. Flax uses setup
    # and built-in __call__ magic methods for forward pass. Adding here
    # for consistency
    def forward(self, X, *args, **kwargs):
        assert hasattr(self, 'net'), 'Neural network is not defined'
        return self.net(X, *args, **kwargs)

    def __call__(self, X, *args, **kwargs):
        return self.forward(X, *args, **kwargs)

    def plot(self, key, value, train):
        """Plot a point in animation."""
        assert hasattr(self, 'trainer'), 'Trainer is not inited'
        self.board.xlabel = 'epoch'
        if train:
            x = self.trainer.train_batch_idx / \
                self.trainer.num_train_batches
            n = self.trainer.num_train_batches / \
                self.plot_train_per_epoch
        else:
            x = self.trainer.epoch + 1
            n = self.trainer.num_val_batches / \
                self.plot_valid_per_epoch
        self.board.draw(x, jax.device_put(value, d2l.cpu()),
                        ('train_' if train else 'val_') + key,
                        every_n=int(n))

    def training_step(self, params, batch, state):
        l, grads = jax.value_and_grad(self.loss)(params, batch[:-1],
                                                 batch[-1], state)
        self.plot("loss", l, train=True)
        return l, grads

    def validation_step(self, params, batch, state):
        l = self.loss(params, batch[:-1], batch[-1], state)
        self.plot('loss', l, train=False)

    def apply_init(self, dummy_input, key):
        """To be defined later in :numref:`sec_lazy_init`"""
        raise NotImplementedError

    def configure_optimizers(self):
        raise NotImplementedError

You may notice that Module is a subclass of linen.Module, the base class of neural networks in Flax. It provides convenient features to handle neural networks. For example, it handles the model parameters, provides the nn.compact decorator to simplify code, invokes the __call__ method among other things. Here we also redirect __call__ to the forward method. We do this to make our code more similar to other framework implementations.

class Module(tf.keras.Model, d2l.HyperParameters):  #@save
    """The base class of models."""
    def __init__(self, plot_train_per_epoch=2, plot_valid_per_epoch=1):
        super().__init__()
        self.save_hyperparameters()
        self.board = ProgressBoard()
        self.training = None

    def loss(self, y_hat, y):
        raise NotImplementedError

    def forward(self, X):
        assert hasattr(self, 'net'), 'Neural network is not defined'
        return self.net(X)

    def call(self, X, *args, **kwargs):
        if kwargs and "training" in kwargs:
            self.training = kwargs['training']
        return self.forward(X, *args)

    def plot(self, key, value, train):
        """Plot a point in animation."""
        assert hasattr(self, 'trainer'), 'Trainer is not inited'
        self.board.xlabel = 'epoch'
        if train:
            x = self.trainer.train_batch_idx / \
                self.trainer.num_train_batches
            n = self.trainer.num_train_batches / \
                self.plot_train_per_epoch
        else:
            x = self.trainer.epoch + 1
            n = self.trainer.num_val_batches / \
                self.plot_valid_per_epoch
        self.board.draw(x, value.numpy(), (
            'train_' if train else 'val_') + key, every_n=int(n))

    def training_step(self, batch):
        l = self.loss(self(*batch[:-1]), batch[-1])
        self.plot('loss', l, train=True)
        return l

    def validation_step(self, batch):
        l = self.loss(self(*batch[:-1]), batch[-1])
        self.plot('loss', l, train=False)

    def configure_optimizers(self):
        raise NotImplementedError

You may notice that Module is a subclass of tf.keras.Model, the base class of neural networks in TensorFlow. It provides convenient features to handle neural networks. For example, it invokes the call method in the built-in __call__ method. Here we redirect call to the forward method, saving its arguments as a class attribute. We do this to make our code more similar to other framework implementations.
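The plot method shared by the Module listings above turns batch counters into an x coordinate measured in epochs and a smoothing window every_n. A small worked example of the training branch makes the arithmetic concrete; the function name train_plot_coordinates is hypothetical, while the argument names follow the attributes used in plot.

```python
def train_plot_coordinates(train_batch_idx, num_train_batches,
                           plot_train_per_epoch):
    """Mirror the arithmetic in the training branch of plot."""
    # Position on the x axis, in units of epochs.
    x = train_batch_idx / num_train_batches
    # Number of consecutive batches averaged into one plotted point.
    every_n = int(num_train_batches / plot_train_per_epoch)
    return x, every_n


x, every_n = train_plot_coordinates(train_batch_idx=150,
                                    num_train_batches=100,
                                    plot_train_per_epoch=2)
# x == 1.5: halfway through the second epoch
# every_n == 50: two plotted points per epoch of 100 batches
```

In other words, plot_train_per_epoch sets how many training points appear per epoch regardless of how many batches an epoch contains.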

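3.2.3. Data

The DataModule class is the base class for data. Quite frequently the __init__ method is used to prepare the data. This includes downloading and preprocessing if needed. The train_dataloader method returns the data loader for the training dataset. A data loader is a (Python) generator that yields a data batch each time it is used. This batch is then fed into the training_step method of Module to compute the loss. There is an optional val_dataloader to return the validation dataset loader. It behaves in the same manner, except that it yields data batches for the validation_step method in Module.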

# PyTorch and MXNet variant (takes num_workers)
class DataModule(d2l.HyperParameters):  #@save
    """The base class of data."""
    def __init__(self, root='../data', num_workers=4):
        self.save_hyperparameters()

    def get_dataloader(self, train):
        raise NotImplementedError

    def train_dataloader(self):
        return self.get_dataloader(train=True)

    def val_dataloader(self):
        return self.get_dataloader(train=False)


# JAX and TensorFlow variant (no num_workers)
class DataModule(d2l.HyperParameters):  #@save
    """The base class of data."""
    def __init__(self, root='../data'):
        self.save_hyperparameters()

    def get_dataloader(self, train):
        raise NotImplementedError

    def train_dataloader(self):
        return self.get_dataloader(train=True)

    def val_dataloader(self):
        return self.get_dataloader(train=False)

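To see what "a generator that yields a data batch" looks like, here is a framework-free sketch with the same three methods as DataModule. The class ToyDataModule, its synthetic data, and its arguments are all hypothetical; it does not inherit from the d2l base class so that it runs on its own.

```python
import random


class ToyDataModule:
    """Hypothetical stand-in mirroring the DataModule interface."""

    def __init__(self, num_train=10, num_val=4, batch_size=4):
        self.batch_size = batch_size
        # Synthetic (x, y) pairs with y = 2x + 1.
        self.train = [(float(i), 2.0 * i + 1.0) for i in range(num_train)]
        self.val = [(float(i), 2.0 * i + 1.0)
                    for i in range(num_train, num_train + num_val)]

    def get_dataloader(self, train):
        data = list(self.train if train else self.val)
        if train:
            random.shuffle(data)  # shuffle only the training examples
        for i in range(0, len(data), self.batch_size):
            chunk = data[i:i + self.batch_size]
            # Each batch is (features, labels), labels last.
            yield [x for x, _ in chunk], [y for _, y in chunk]

    def train_dataloader(self):
        return self.get_dataloader(train=True)

    def val_dataloader(self):
        return self.get_dataloader(train=False)


data = ToyDataModule()
train_batches = list(data.train_dataloader())
val_batches = list(data.val_dataloader())
```

Putting the labels last matches how training_step splits a batch into batch[:-1] and batch[-1]. Note that a plain generator has no length, so a loader used with code that calls len on it would also need to define __len__.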

3.2.4. Training

The Trainer class trains the learnable parameters in the Module class with data specified in DataModule. The key method is fit, which accepts two arguments: model, an instance of Module, and data, an instance of DataModule. It then iterates over the entire dataset max_epochs times to train the model. As before, we will defer the implementation of this method to later chapters.

class Trainer(d2l.HyperParameters):  #@save
    """The base class for training models with data."""
    def __init__(self, max_epochs, num_gpus=0, gradient_clip_val=0):
        self.save_hyperparameters()
        assert num_gpus == 0, 'No GPU support yet'

    def prepare_data(self, data):
        self.train_dataloader = data.train_dataloader()
        self.val_dataloader = data.val_dataloader()
        self.num_train_batches = len(self.train_dataloader)
        self.num_val_batches = (len(self.val_dataloader)
                                if self.val_dataloader is not None else 0)

    def prepare_model(self, model):
        model.trainer = self
        model.board.xlim = [0, self.max_epochs]
        self.model = model

    def fit(self, model, data):
        self.prepare_data(data)
        self.prepare_model(model)
        self.optim = model.configure_optimizers()
        self.epoch = 0
        self.train_batch_idx = 0
        self.val_batch_idx = 0
        for self.epoch in range(self.max_epochs):
            self.fit_epoch()

    def fit_epoch(self):
        raise NotImplementedError


In the JAX version, the Trainer class trains the learnable parameters params with data specified in DataModule. The key method is fit, which accepts three arguments: model, an instance of Module; data, an instance of DataModule; and key, a JAX PRNGKeyArray. We make the key argument optional here to simplify the interface, but it is recommended to always pass a root key and use it to initialize the model parameters in JAX and Flax. The method then iterates over the entire dataset max_epochs times to train the model. As before, we will defer the implementation of this method to later chapters.

class Trainer(d2l.HyperParameters):  #@save
    """The base class for training models with data."""
    def __init__(self, max_epochs, num_gpus=0, gradient_clip_val=0):
        self.save_hyperparameters()
        assert num_gpus == 0, 'No GPU support yet'

    def prepare_data(self, data):
        self.train_dataloader = data.train_dataloader()
        self.val_dataloader = data.val_dataloader()
        self.num_train_batches = len(self.train_dataloader)
        self.num_val_batches = (len(self.val_dataloader)
                                if self.val_dataloader is not None else 0)

    def prepare_model(self, model):
        model.trainer = self
        model.board.xlim = [0, self.max_epochs]
        self.model = model

    def fit(self, model, data, key=None):
        self.prepare_data(data)
        self.prepare_model(model)
        self.optim = model.configure_optimizers()

        if key is None:
            root_key = d2l.get_key()
        else:
            root_key = key
        params_key, dropout_key = jax.random.split(root_key)
        key = {'params': params_key, 'dropout': dropout_key}

        dummy_input = next(iter(self.train_dataloader))[:-1]
        variables = model.apply_init(dummy_input, key=key)
        params = variables['params']

        if 'batch_stats' in variables.keys():
            # Here batch_stats will be used later (e.g., for batch norm)
            batch_stats = variables['batch_stats']
        else:
            batch_stats = {}

        # Flax uses optax under the hood for a single state obj TrainState.
        # More will be discussed later in the dropout and batch
        # normalization section
        class TrainState(train_state.TrainState):
            batch_stats: Any
            dropout_rng: jax.random.PRNGKeyArray

        self.state = TrainState.create(apply_fn=model.apply,
                                       params=params,
                                       batch_stats=batch_stats,
                                       dropout_rng=dropout_key,
                                       tx=model.configure_optimizers())
        self.epoch = 0
        self.train_batch_idx = 0
        self.val_batch_idx = 0
        for self.epoch in range(self.max_epochs):
            self.fit_epoch()

    def fit_epoch(self):
        raise NotImplementedError

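Since fit_epoch is left unimplemented here, a toy version may help show how the pieces are meant to interact. The sketch below is only an illustration under strong simplifying assumptions: ToyModel and ToyTrainer are hypothetical, the model has a single scalar weight, its training_step returns the gradient alongside the loss, and the update is plain gradient descent written by hand. It is not the implementation given in later chapters.

```python
class ToyModel:
    """Hypothetical scalar model y_hat = w * x with squared loss."""

    def __init__(self, lr=0.01):
        self.w = 0.0
        self.lr = lr

    def training_step(self, batch):
        X, y = batch
        errors = [self.w * x - t for x, t in zip(X, y)]
        # Mean squared error and its derivative with respect to w.
        loss = sum(e * e for e in errors) / len(X)
        grad = sum(2 * e * x for e, x in zip(errors, X)) / len(X)
        return loss, grad


class ToyTrainer:
    def __init__(self, max_epochs):
        self.max_epochs = max_epochs

    def fit(self, model, batches):
        self.model, self.batches = model, batches
        for self.epoch in range(self.max_epochs):
            self.fit_epoch()

    def fit_epoch(self):
        # One pass over the data: one parameter update per batch.
        for batch in self.batches:
            _, grad = self.model.training_step(batch)
            self.model.w -= self.model.lr * grad


# Data generated from y = 3x, split into two batches.
batches = [([1.0, 2.0], [3.0, 6.0]), ([3.0, 4.0], [9.0, 12.0])]
model = ToyModel(lr=0.01)
ToyTrainer(max_epochs=200).fit(model, batches)
```

After training, model.w is very close to 3, the slope used to generate the data. The division of labor is the point: the model knows how to score a batch, and the trainer decides how often and in what order batches are visited.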

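3.2.5. Summary

To highlight the object-oriented design for our future deep learning implementation, the above classes simply show how their objects store data and interact with each other. We will keep enriching implementations of these classes, such as via @add_to_class, in the rest of the book. Moreover, these fully implemented classes are saved in the D2L library, a lightweight toolkit that makes structured modeling for deep learning easy. In particular, it facilitates reusing many components between projects without changing much at all. For instance, we can replace just the optimizer, just the model, just the dataset, and so on; this degree of modularity pays dividends throughout the book in terms of conciseness and simplicity (this is why we added it), and it can do the same for your own projects.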

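3.2.6. Exercises

Locate the full implementations of the above classes that are saved in the D2L library. We strongly recommend that you look at the implementation in detail once you have gained some more familiarity with deep learning modeling.

Remove the save_hyperparameters statement in the B class. Can you still print self.a and self.b? Optional: if you have dived into the full implementation of the HyperParameters class, can you explain why?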
